R has emerged as a powerful tool for data analysis and visualization, including natural language processing (NLP). Even though Python remains the most popular language for NLP, R has its unique strengths and a suite of packages tailored for text analysis. In this comprehensive guide, we’ll delve deep into natural language processing in R, exploring its top packages, its strengths, and why it’s a viable choice for NLP tasks.

Natural language processing (NLP) is a sub-field of artificial intelligence that focuses on the interaction between computers and humans through natural language. The ultimate goal is to read, decipher, understand, and make sense of human language in a manner that is valuable. R, with its rich ecosystem, provides a variety of tools and packages for NLP. From sentiment analysis to topic modeling, R offers functionality for most common NLP tasks.

R’s large suite of packages makes it a formidable tool for NLP tasks. Understanding the capabilities of each package can help users unlock the full power of R for NLP. Here’s a closer look at some of the standout packages:

tm: Often considered the cornerstone of text mining in R, the tm package provides functionalities like removing punctuation, stemming, and term-document matrix creation. It’s a must-have for anyone starting with text analysis in R.
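As a minimal sketch of the steps named above (punctuation removal, stemming, and term-document matrix creation), the following assumes the tm package is installed along with SnowballC, which `stemDocument()` relies on. The two documents are made up for illustration.

```r
# Assumes tm and SnowballC are installed.
library(tm)

# Two made-up documents, purely for illustration.
docs <- c(
  "Text mining in R starts with a clean corpus!",
  "Stemming and punctuation removal simplify the text."
)

# Build a corpus and apply common cleaning steps in order.
corpus <- VCorpus(VectorSource(docs))
corpus <- tm_map(corpus, content_transformer(tolower))
corpus <- tm_map(corpus, removePunctuation)
corpus <- tm_map(corpus, removeWords, stopwords("english"))
corpus <- tm_map(corpus, stemDocument)
corpus <- tm_map(corpus, stripWhitespace)

# Rows are terms, columns are documents.
tdm <- TermDocumentMatrix(corpus)
inspect(tdm)
```

By default `TermDocumentMatrix()` drops terms shorter than three characters, so very short tokens will not appear in the matrix.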

textclean: Just as its name hints, it’s super handy for tidying up and prepping your text data. It’s got these cool features that help with stuff like mending broken UTF-8 codes, sorting out URLs, and even ironing out those pesky typographical mistakes. Honestly, if you’re diving into raw and unorganized text, you’re going to want textclean by your side.

tidytext: If you’re into text analysis with R, you’ve got to check out tidytext. It’s this awesome package that brings the ’tidyverse’ philosophy right into the world of text mining. So, instead of wrestling with messy text data, tidytext lets you handle it just like you would with tidy data frames. Super handy for cleaning, analyzing, and visualizing textual data. Honestly, it’s a game-changer for anyone looking to make sense of words and phrases in a neat and tidy way!
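To make the tidy approach concrete, here is a small sketch that assumes dplyr and tidytext are installed. The review texts and column names are invented for the example.

```r
# Assumes dplyr and tidytext are installed.
library(dplyr)
library(tidytext)

# Made-up reviews, one row per document.
reviews <- tibble(
  id = 1:2,
  text = c(
    "The breakfast was excellent and the staff were friendly.",
    "Excellent location, but the breakfast was cold."
  )
)

word_counts <- reviews %>%
  unnest_tokens(word, text) %>%            # one lowercase token per row
  anti_join(stop_words, by = "word") %>%   # drop common English stop words
  count(word, sort = TRUE)                 # frequency table, most common first

word_counts
```

Because the result is an ordinary data frame, it can go straight into other tidyverse tools for further filtering or plotting.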

sentimentr: It’s this super cool package that’s all about gauging the mood of your text. Instead of just counting positive or negative words, it’s smart enough to consider the context, such as negators and amplifiers around a word, making its sentiment scores way more on point. If you’re looking to get a real feel for how your text reads, be it happy, sad, or anything in between, sentimentr is your go-to buddy. It’s made my text analysis so much more insightful!
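A short sketch of what context-aware scoring looks like, assuming sentimentr is installed. The two sentences are invented and differ only in the word before "happy", so the negated one should come out below zero and the amplified one above zero.

```r
# Assumes sentimentr is installed.
library(sentimentr)

# Made-up sentences that differ only in the word before "happy".
reviews <- c(
  "I am not happy with the service.",
  "I am really happy with the service."
)

# Split into sentences first, then score each sentence.
sentences <- get_sentences(reviews)
scores <- sentiment(sentences)
scores

# Aggregate sentence scores back to one row per review.
by_review <- sentiment_by(sentences)
by_review
```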

RTextTools: Think of it as your all-in-one toolbox for machine learning text classification. The best part? It syncs up beautifully with the tm package. So, if you’re in the mood to set up some advanced text classification routines, this package has got your back.

topicmodels: It’s all about topic modeling, which, in simple terms, helps you uncover hidden themes in a bunch of documents. With topicmodels, you get this neat gateway to the well-loved LDA and CTM topic models. So, if you’re looking to pull out some insightful topics from your text, this package is a game-changer.
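Here is a rough sketch of fitting an LDA model, assuming tm and topicmodels are installed. The four documents and the choice of `k = 2` topics are made up for the example; a real corpus would need far more text and some care in choosing `k`.

```r
# Assumes tm and topicmodels are installed.
library(tm)
library(topicmodels)

# Four made-up documents covering two rough themes.
docs <- c(
  "The hotel room was clean and the bed was comfortable.",
  "Comfortable bed, clean bathroom, quiet room.",
  "The restaurant served fresh seafood and local wine.",
  "Great wine list and fresh pasta at the restaurant."
)

# Document-term matrix with basic cleaning applied while counting terms.
dtm <- DocumentTermMatrix(
  VCorpus(VectorSource(docs)),
  control = list(tolower = TRUE, removePunctuation = TRUE, stopwords = TRUE)
)

# Fit LDA with two topics; the seed makes the run repeatable.
lda_fit <- LDA(dtm, k = 2, control = list(seed = 1234))

terms(lda_fit, 3)  # three highest-probability terms per topic
topics(lda_fit)    # most likely topic for each document
```

With a corpus this small the topics are not meaningful; the point is only the shape of the workflow.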

tokenizers: Tokenization is a foundational step in NLP, and tokenizers excels in this domain. Whether you’re aiming to split text into words, sentences, paragraphs, or just lines, tokenizers is the go-to. It’s got this super intuitive API that’s not only easy to use but also super speedy.
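The following sketch shows the different splitting levels, assuming the tokenizers package is installed. The sample text is invented.

```r
# Assumes tokenizers is installed.
library(tokenizers)

sample_text <- "Tokenization comes first. Everything else builds on it."

# Each function returns a list with one element per input document.
words     <- tokenize_words(sample_text)          # lowercased, punctuation stripped
sentences <- tokenize_sentences(sample_text)
bigrams   <- tokenize_ngrams(sample_text, n = 2)  # overlapping two-word sequences

words[[1]]
sentences[[1]]
bigrams[[1]]
```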

syuzhet: Ever wondered about the feelings behind a story? That’s where sentiment extraction comes in, and let me tell you, syuzhet is a star player. Beyond just gauging sentiment, it can sketch out plot arcs, which is a dream for diving into stories, scripts, or any tale you’ve got. And with its range of sentiment dictionaries and cool ways to visualize, syuzhet is your all-in-one package for getting to the heart of emotions.
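As a small illustration, assuming syuzhet is installed, this sketch scores a made-up three-sentence story sentence by sentence and then pulls emotion counts from the NRC dictionary.

```r
# Assumes syuzhet is installed.
library(syuzhet)

# A made-up story, purely for illustration.
story <- paste(
  "The morning was bright and full of hope.",
  "Then the storm destroyed the village.",
  "Slowly, the people rebuilt their homes with joy."
)

sentences <- get_sentences(story)

# One valence score per sentence, using the package's default dictionary.
valence <- get_sentiment(sentences, method = "syuzhet")

# One row per sentence: eight emotion counts plus negative and positive.
emotions <- get_nrc_sentiment(sentences)

valence
emotions
```

Plotting `valence` in sentence order gives a crude version of the plot arc described above.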

NLP: This package serves as a foundational tool for NLP in R. It provides the necessary building blocks for more advanced text processing tasks. Whether you need to tokenize, annotate, or work with feature structures, NLP is a core component.

openNLP: By bridging R with the Apache OpenNLP tools, openNLP showcases the power of machine learning in NLP. It offers a range of capabilities including tokenization, sentence splitting, and named entity recognition. If you’re looking for an accurate NLP toolkit, openNLP is definitely worth considering.

udpipe: Rooted in the Universal Dependencies framework, udpipe is a one-stop-shop for a myriad of NLP tasks. Be it tokenization, lemmatization, or part-of-speech tagging, udpipe delivers with efficiency and accuracy. Its comprehensive suite of functionalities ensures that users have all the tools they need for in-depth text analysis.

text: It’s crafted for diving deep into human language, using some of the coolest tricks from NLP and DL, especially those transformer techniques. What’s neat is that it gives you the flexibility of a modular approach to tap into top-notch language models, but also offers a complete solution tailored for in-depth human analysis. And guess what? Whether you’re working with a tiny dataset or a massive one from the social sciences, its user-friendly functions have got you covered.

quanteda: This package is designed for the quantitative analysis of textual data in R. It offers a comprehensive set of tools for efficient text processing, analysis, and visualization. Users can preprocess texts by performing tasks like tokenization, stemming, and stopword removal. The processed texts can then be converted into a document-feature matrix (DFM), which is suitable for many types of analysis. The package offers functions for calculating text statistics such as term frequency and document frequency. It also supports visualizing patterns in text, measuring similarities between texts, and techniques like correspondence analysis and topic modeling. Developed with efficiency in mind, quanteda is capable of handling large datasets and integrates with other well-known R packages, keeping the text analysis workflow smooth.
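The workflow described above (tokenize, remove stopwords, stem, build a DFM, compute frequencies) might look roughly like this, assuming quanteda is installed. The two texts are invented for the example.

```r
# Assumes quanteda is installed.
library(quanteda)

# Made-up texts, purely for illustration.
texts <- c(
  doc1 = "Guests praised the friendly staff and the quiet rooms.",
  doc2 = "The rooms were quiet, but guests disliked the noisy lobby."
)

toks <- tokens(texts, remove_punct = TRUE)   # split into word tokens
toks <- tokens_remove(toks, stopwords("en")) # drop English stop words
toks <- tokens_wordstem(toks)                # reduce words to their stems

dfmat <- dfm(toks)       # document-feature matrix (lowercased by default)

topfeatures(dfmat, 5)    # most frequent features overall
docfreq(dfmat)           # number of documents each feature appears in
```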

R’s strengths in NLP are manifold:

Rich Ecosystem: R offers a comprehensive suite of packages specifically designed for NLP endeavors. Whether your focus is sentiment analysis, topic modeling, or text classification, R has a dedicated package to cater to your needs.

Data Visualization: R’s prowess in data visualization is noteworthy. With tools like `ggplot2`, visualizing intricate textual data, from word distributions to sentiment metrics, becomes both intuitive and insightful.
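As a minimal sketch of plotting a word distribution, assuming ggplot2 is installed, the following counts words in a made-up sentence with base R and draws a horizontal bar chart.

```r
# Assumes ggplot2 is installed.
library(ggplot2)

# A made-up, already lowercased sentence with no punctuation.
sample_text <- "text mining turns raw text into data and data into insight about text"

# Count words with base R and convert the table to a data frame.
words <- strsplit(sample_text, " ", fixed = TRUE)[[1]]
freq <- as.data.frame(table(word = words), stringsAsFactors = FALSE)

# Horizontal bar chart, most frequent word at the top.
p <- ggplot(freq, aes(x = reorder(word, Freq), y = Freq)) +
  geom_col() +
  coord_flip() +
  labs(x = "Word", y = "Frequency", title = "Word frequencies in a toy sentence")

print(p)
```

The same pattern works for sentiment scores: put the score on one axis and the document or sentence index on the other.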

Community Support: The R community is known for its active user base. With the growth of natural language processing in R, the community has expanded, leading to better support, more comprehensive documentation, and continuous improvements in the available packages.

Interoperability: A significant advantage of R is its adaptability. It can effortlessly interface with other programming languages and tools. So, even if a specific NLP feature isn’t native to R, it’s straightforward to integrate functionalities from languages like Python.
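One common bridge to Python is the reticulate package. The text above does not name it, so treat that choice as an assumption. The sketch below also assumes a working Python installation that reticulate can find, and it only uses Python's standard `re` module to keep the example small.

```r
# Assumes reticulate is installed and can locate a Python interpreter.
library(reticulate)

# Import a Python module; its functions become callable from R with `$`.
re <- import("re")

# Call Python's re.findall(); the resulting list of strings is
# converted to an R character vector.
py_tokens <- re$findall("[a-z]+", "r can call python when needed")
py_tokens
```

The same `import()` pattern applies to Python NLP libraries, provided they are installed in the Python environment reticulate is using.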

NLP is a challenging domain due to the inherent complexities of human language. Idioms, sarcasm, homonyms, and cultural context can all pose challenges for NLP algorithms. Here’s how R addresses some of these challenges:

Preprocessing Tools: Packages like textclean and stringi offer robust tools to clean and preprocess text, ensuring that the data fed into NLP algorithms is of high quality.

Advanced Algorithms: R provides access to some of the latest algorithms in NLP, ensuring that users have the tools they need to tackle complex NLP tasks.

Integration with Deep Learning: With packages like keras, R users can leverage the power of deep learning for NLP tasks, while textTinyR helps with processing large text files. Together with the wider ecosystem, this puts models like Word2Vec and BERT within reach of R users.

Scalability: As datasets grow larger, scalability becomes a concern. R addresses this with packages like data.table, which processes large in-memory tables efficiently, and ff, which keeps data on disk so that it need not fit in memory. For NLP tasks, this means that R can work with large corpora of text.

To truly appreciate R’s capabilities in NLP, let’s explore some real-world applications:

Background: Twitter, one of the leading microblogging platforms, enables users to express views, follow favorite personalities, and engage in tweet/retweet activities. However, many users often remain unaware of the emotions underlying their tweets or those of the personalities they follow. A group of researchers collected tweets from multiple celebrity accounts to analyze the sentiment behind them.

Solution: This research approached the challenge by treating it as an emotion classification problem using the R programming language. The methodology included using the sentimentr package to delve deeper into personality insights, moving beyond just categorizing tweets as positive, negative, or neutral.

Outcome: The applied methodology successfully estimated a range of emotions behind tweets, such as happiness, sadness, anxiety, joy, anger, surprise, and fear. The nuanced analysis allows for insights like determining whether a user with predominantly positive tweets is happy or joyous. Conversely, if there are more negative than positive tweets, it suggests the user might be sad, angry, anxious, or fearful. This research’s primary contribution lies in its ability to identify emotions and discern the evolving trends of personalities or Twitter users.

Background: Imagine being a hotel manager and wanting to know what your guests truly think. In the era of “Tourism 4.0”, where the focus has shifted from sellers to buyers, understanding the guest’s perspective is paramount. This case study delves into the vast world of online accommodation reviews, specifically from Korean customers. With a dataset of 104,161 online reviews, the challenge was to uncover the hidden topics that guests frequently discuss.

Solution: To tackle this challenge, a team of researchers turned to the power of the R programming language. Using a combination of R packages, including stringr for string operations, tidyverse for data manipulation, and tm for text mining, they preprocessed the massive dataset. The hunspell package helped in refining the words, and the star of the show, the topicmodels package, was used to perform Latent Dirichlet Allocation (LDA) - a fancy term for extracting topics from large volumes of text. This approach allowed the team to sift through the sea of reviews and pinpoint the key topics that guests were talking about.

Outcome: The results were eye-opening! While traditional factors like location and service quality were still top of mind for guests, the study revealed more nuanced insights. For instance, when comparing city accommodations to rural ones, or modern hotels to traditional Korean “hanok” accommodations, different priorities emerged. These insights offer a treasure trove of information for anyone in the hospitality industry, providing a clearer roadmap to meet and exceed guest expectations.

The field of NLP is rapidly evolving, and R continues to adapt to these changes. With the rise of large language models (LLMs) like LLaMA and GPT-4, there’s a push towards integrating these models into the R ecosystem. Packages like text, which builds on the Hugging Face transformers library, are making strides in this direction, providing R users with access to state-of-the-art NLP models.

Furthermore, as the field of NLP moves towards more unsupervised and semi-supervised learning methods, R’s rich statistical modeling heritage makes it well-suited to adapt to these changes.

R offers a comprehensive toolkit for those looking to dive into natural language processing. Its packages and functions simplify complex NLP tasks and offer powerful visualization techniques. As the field of NLP continues to grow and evolve, R remains a valuable tool for researchers, data scientists, and anyone interested in extracting insights from text.

For those interested in further reading, check out these sources:

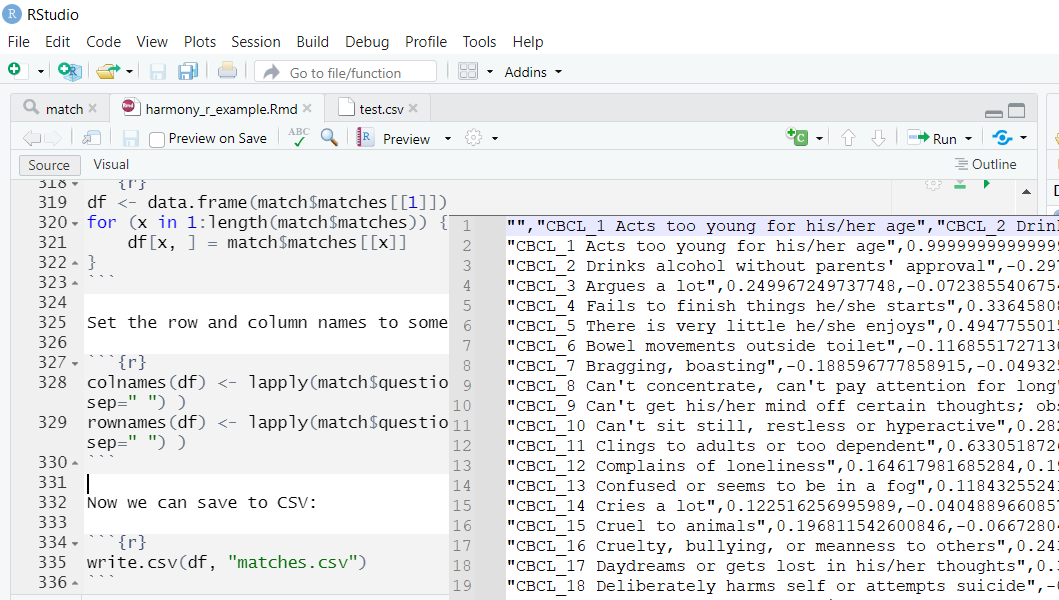

“Fine-tuning” means adapting an existing machine learning model for specific tasks or use cases. In this post I’m going to walk you through how you can fine tune a large language model for sentence similarity using some hand annotated test data. This example is in the psychology domain. You need training data consisting of pairs of sentences, and a “ground truth” of how similar you want those sentences to be when you train your custom sentence similarity model.

Hire an NLP developer and untangle the power of natural language in your projects The world is buzzing with the possibilities of natural language processing (NLP). From chatbots that understand your needs to algorithms that analyse mountains of text data, NLP is revolutionising industries across the board. But harnessing this power requires the right expertise. That’s where finding the perfect NLP developer comes in. Post a job in NLP on naturallanguageprocessing.

Natural language processing What is natural language processing? Natural language processing, or NLP, is a field of artificial intelligence that focuses on the interaction between computers and humans using natural language. NLP is a branch of AI but is really a mixture of disciplines such as linguistics, computer science, and engineering. There are a number of approaches to NLP, ranging from rule-based modelling of human language to statistical methods. Common uses of NLP include speech recognition systems, the voice assistants available on smartphones, and chatbots.